In an ultra-competitive telecoms market, each lost customer (churn) weighs heavily: beyond the immediate loss of revenue, replacing a subscriber costs up to five times more than keeping them.

Rather than piling up promotional offers and manual reminders, Big Data and Machine Learning make it possible to anticipate attrition: by analyzing usage history (calls, data, interactions) and weak signals, we identify at-risk customers and direct retention actions where they are really necessary.

In this article, we first detail the challenges of churn in telecoms (its scale, its financial consequences and the limits of traditional approaches), then briefly describe how to build a predictive churn model using Big Data and ML technologies.

I. Understanding the challenge of churn in telecoms: using Big Data to predict churn

Before deploying solutions, it is still necessary to clearly understand the scale of the phenomenon, the marketing approaches traditionally implemented to counter it, as well as the concrete impact of churn on business performance.

1. Churn, an omnipresent phenomenon in a competitive market

The discontinuation of service use by existing customers is a ubiquitous phenomenon in the business world, particularly in highly competitive economic environments.

The telecommunications sector is one such environment: it is highly attractive, which keeps the market in constant flux.

This explains the extent of attrition in telecommunications companies.

The data enthusiasts at Baamtu have implemented a solution that aims, among other things, to reduce this attrition, which marketing calls “churn”, through the combined use of Big Data and Machine Learning.

2. Customer churn or attrition, a major strategic challenge

Customer attrition occurs when a customer permanently stops using services to which they previously subscribed. It can also be defined as non-use over a given period of time.

The marketing strategies traditionally used by telecommunications companies to manage their customers generally fall into three categories:

- the acquisition of new customers,

- upselling to existing customers and

- customer retention.

Each of these strategies comes at a cost. Acquisition occupies a prominent place in the marketing strategies of telecommunications companies.

As long as acquisition continues to monopolize marketing efforts, customer retention will continue to suffer and the attrition rate (churn rate) will continue to increase.

3. The attrition rate: a key indicator of business health

The churn rate measures the proportion of a company's customers who stop using its services over a given period. It is an important indicator because its fluctuations provide information on the "health" of the business.

When left unchecked, this attrition cancels out the acquisition efforts made by marketing, hence the need to implement an effective retention policy.
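
As a rough illustration, the churn rate over a period is commonly computed as the number of customers lost during the period divided by the number of customers at the start of the period. The sketch below assumes that convention (some operators divide by the average customer base instead); the names `ChurnRate` and `rate` are ours and do not come from the solution described here.

```scala
object ChurnRate {
  /** Share of customers lost over a period, relative to the base at the start of the period.
    * Assumption: customers acquired during the period are not counted in the denominator. */
  def rate(customersAtStart: Long, customersLost: Long): Double = {
    require(customersAtStart > 0, "customer base must be positive")
    require(customersLost >= 0 && customersLost <= customersAtStart,
      "lost customers must be between 0 and the customer base")
    customersLost.toDouble / customersAtStart
  }
}
```

For example, an operator that starts a month with 10,000 subscribers and loses 250 of them has a monthly churn rate of 2.5% under this definition.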

4. Target the right customers for effective marketing actions

Telecommunications companies often have a very large number of customers to manage, and the marketing department therefore cannot afford to focus on each of them in isolation.

But imagine if they knew that a given customer had a very high probability of leaving (churning). Retention-related marketing efforts could then be reduced by redirecting them to this specific group of at-risk customers.

II. Process of setting up a predictive churn model

The idea is to set up a predictive attrition model, in other words a tool capable of telling us, with a certain score, that a given customer will “churn”, i.e. stop using the services. The objective is to allow a manager to make these predictions interactively, with near-real-time latency, and to view customers stratified by their churn propensity scores.

The predictive model is built from historical data, meaning data from customers who have stopped using the services as well as data from customers who are still subscribed, as supervised machine learning requires.

To achieve this, several technologies and tools must be implemented. We will therefore present them in the next section.

1. Solution architecture: technologies used

When it comes to customer knowledge, the more varied and numerous the data describing them, the better! The potential problems associated with storing and processing them are no longer an issue, thanks in particular to the distributed paradigm.

Apache Spark is a big data processing engine offering several processing possibilities including batch data processing, real-time data processing, graph processing and finally machine learning.

Spark's Machine Learning library, MLlib, supports several families of classification, regression and dimensionality reduction algorithms, as well as a large number of tools for data preprocessing.

To build our model, however, we use the XGBoost library through its JVM packages, combined with Apache Spark. This speeds up training, and parameter selection through cross-validation is also carried out in a distributed manner.

We use Spark through its Scala API.

XGBoost, or eXtreme Gradient Boosting, is an algorithm based on gradient boosting. Boosting, as opposed to bagging, involves training multiple decision trees sequentially, each of which corrects the errors made in the previous iteration. Models are added sequentially until no further improvement can be made. The final prediction is obtained by summing the predictions of the individual decision trees.

The term 'gradient boosting' refers to the use of gradient descent to minimize the loss as new models are added.
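
The principle described above can be sketched in a few lines of plain Scala. This is a deliberately simplified illustration, not XGBoost's actual algorithm (which also uses second-order information and regularization): it assumes a one-dimensional regression problem, a squared loss (whose negative gradient is simply the residual) and decision stumps as weak learners. All names (`BoostingSketch`, `Stump`, `train`, `predict`) are hypothetical.

```scala
object BoostingSketch {
  // A decision stump: predicts `left` when x < threshold, `right` otherwise.
  final case class Stump(threshold: Double, left: Double, right: Double) {
    def predict(x: Double): Double = if (x < threshold) left else right
  }

  private def mean(values: Seq[Double]): Double =
    if (values.isEmpty) 0.0 else values.sum / values.size

  // Fit the stump that minimises the squared error against the given targets.
  // Assumes xs is non-empty and has the same length as targets.
  def fitStump(xs: Seq[Double], targets: Seq[Double]): Stump = {
    val points = xs.zip(targets)
    val candidates = xs.distinct.map { t =>
      val (l, r) = points.partition(_._1 < t)
      Stump(t, mean(l.map(_._2)), mean(r.map(_._2)))
    }
    candidates.minBy { s =>
      points.map { case (x, y) => math.pow(y - s.predict(x), 2) }.sum
    }
  }

  // Final prediction: the sum of the stump predictions, each shrunk by eta.
  def predict(model: Seq[Stump], eta: Double, x: Double): Double =
    model.map(s => eta * s.predict(x)).sum

  // Train `rounds` stumps; each one is fitted to the residuals (the negative
  // gradient of the squared loss) left by the stumps added before it.
  def train(xs: Seq[Double], ys: Seq[Double], rounds: Int, eta: Double): Seq[Stump] =
    (1 to rounds).foldLeft(Vector.empty[Stump]) { (model, _) =>
      val residuals = xs.zip(ys).map { case (x, y) => y - predict(model, eta, x) }
      model :+ fitStump(xs, residuals)
    }
}
```

Here `eta` plays the same role as the learning rate of the same name in XGBoost: a smaller value makes each tree correct only part of the remaining error, which usually requires more rounds but tends to generalize better.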

We often need to provide the end user with the ability to interact with our model, whether through a web or mobile application or through APIs.

To do this, the trained model needs to be usable on all types of platforms, which is why the MLeap project was created. MLeap is the likely successor to PMML, the XML-based format for exporting machine learning models. MLeap serializes models built with Spark MLlib, scikit-learn or TensorFlow in JSON or Protobuf format and exports them in a single format called a Bundle, usable in particular on platforms running the JVM.

Models can then be used independently of their original training platform (Spark, TensorFlow, scikit-learn, etc.), with no dependency other than the MLeap Runtime.

Play is an MVC framework for building web applications.

Play offers support for Java and Scala programming languages.

Additionally, Play comes packaged with its own server: Akka HTTP since version 2.6.x, and Netty in earlier versions. There is therefore no need to configure a web server in your development and production environments.

It also offers an Akka-based asynchronous engine.

In our case, we use Play through its Scala API to set up a web application through which a manager can send prediction requests for their customers and visualize the results.

You've probably noticed, but we love Scala. Why? It's actually quite simple:

We particularly like this sentence:

It is functional, it is object-oriented, it is …, it is everything you need and more!

As you can see, Scala is object-oriented in the sense that every value is an object, and functional in the sense that every function is a value. Scala has a strong static type system and also offers type inference.
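
A few lines are enough to illustrate these three properties. The object and value names below are purely illustrative.

```scala
object ScalaFlavour {
  // Functions are values: they can be stored in a val and passed around.
  val double: Int => Int = _ * 2

  // Type inference: the compiler infers List[Int] without any annotation.
  val usage = List(120, 45, 300)

  // Everything is an object: even an Int has methods.
  val biggest = (120).max(45)

  // A higher-order method receiving the function value as an argument.
  val doubled = usage.map(double)
}
```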

2. The data used to train the model

As highlighted above, we are implementing a predictive model of attrition (“churn”), in other words a model capable of scoring a customer's propensity to stop using the services. The model is the result of supervised learning, more precisely binary classification, and therefore requires labeled data (data in which the variable to be predicted is known).

| Field | Description |
| --- | --- |
| Account Length | Customer's seniority with the company |
| VMail Message | Total number of the customer's voicemail messages |
| Day Minutes | Total minutes used by the customer during the day |
| Eve Minutes | Total minutes used by the customer during the evening |
| Night Minutes | Total minutes used by the customer during the night |
| International Minutes | Total minutes of the customer's international usage |
| Customer Service Calls | Total number of calls made by the customer to customer service |
| Churn | Target variable to predict: whether the customer is a churner or not |
| International Plan | Whether the customer has the international service |
| Vmail Plan | Whether the customer has the voicemail service |
| Day Calls | Total number of calls made by the customer during the day |
| Day Charge | Total amount charged for the customer's usage during the day |
| Eve Calls | Total number of calls made by the customer during the evening |
| Eve Charge | Total amount charged for the customer's usage during the evening |
| Night Calls | Total number of calls made by the customer during the night |
| Night Charge | Total amount charged for the customer's usage during the night |
| International Calls | Total number of international calls made by the customer |
| International Charge | Total amount charged for the customer's international usage |
| State | Customer's home state |
| Area Code | Area code |
| Phone | Customer's phone number |

This data includes some categories of customer data commonly used in telecommunications companies, namely:

- Usage data: any data relating to the customer referring to their use of services, calls, voice messages, internet services, etc.

- Interaction data: providing information on customer interactions with services such as customer service, call centers, etc.

- Contextual data: any other data that can characterize the customer, such as personal data.

3. Functional architecture

Contextual data such as state, area code and phone are not very significant for the problem we are trying to solve. These variables have therefore been excluded from the process.

As a result, we no longer have to work with categorical values when building our model.
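
The exclusion step can be sketched as follows. This is a minimal illustration in plain Scala, assuming each raw record is represented as a map from column name to raw value and that the column names match the table above; `ContextualColumns` and `dropContextual` are hypothetical names.

```scala
object ContextualColumns {
  // Contextual columns excluded from the modeling process.
  val excluded: Set[String] = Set("State", "Area Code", "Phone")

  // Assumption: a raw record is modelled as column name -> raw string value.
  def dropContextual(record: Map[String, String]): Map[String, String] =
    record.filter { case (column, _) => !excluded.contains(column) }
}
```

On a Spark DataFrame, the same effect would typically be obtained with `drop`, for instance `df.drop("State", "Area Code", "Phone")`, provided the columns carry these names.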

As mentioned above, Spark provides tools to manage the different tasks involved in setting up a model:

- Transformers convert or modify features

- Extractors help extract features from raw data

- Selectors provide a means to select a given number of features from a larger set of features

- Estimators are the abstraction of a machine learning algorithm: fitting an estimator on data produces a model
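
The relationship between estimators and transformers can be illustrated with a stripped-down version of the idea. The traits below are our own simplification written in plain Scala, not Spark's actual classes; in Spark, fitting an `Estimator` likewise returns a model that is itself a `Transformer`.

```scala
object PipelineConcepts {
  type Row = Map[String, Double]

  // A Transformer turns a dataset into another dataset.
  trait Transformer { def transform(data: Seq[Row]): Seq[Row] }

  // An Estimator learns from data and produces a Transformer (the fitted model).
  trait Estimator { def fit(data: Seq[Row]): Transformer }

  // Example estimator: learns the mean of a column, then centres that column.
  // Assumes the data is non-empty and every row contains the column.
  final class MeanCenterer(column: String) extends Estimator {
    def fit(data: Seq[Row]): Transformer = {
      val mean = data.map(_(column)).sum / data.size
      new Transformer {
        def transform(rows: Seq[Row]): Seq[Row] =
          rows.map(r => r.updated(column, r(column) - mean))
      }
    }
  }
}
```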

III. Data processing and modeling

Our first step is to carry out some exploratory tasks on the data: descriptive statistics on the numerical columns, a study of correlation and intercorrelation, and the predictive power of each variable.

These tasks aim to provide a fairly good understanding of the data provided to us.

During preprocessing, we bucket the columns of continuous variables. Apache Spark's Bucketizer transformer turns a column of continuous features into a column of 'buckets' whose boundaries are specified by the user.
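
To make the idea concrete, here is a minimal sketch of bucketing in plain Scala. It follows the usual convention in which n+1 split points define n buckets of the form [lower, upper), with the last bucket also including its upper bound. The split values used in the example are arbitrary assumptions, not those of our model, and `BucketingSketch` is a hypothetical name.

```scala
object BucketingSketch {
  // Returns the index of the bucket [splits(i), splits(i + 1)) containing `value`.
  // The last bucket also includes its upper bound.
  def bucketOf(splits: Seq[Double], value: Double): Int = {
    require(splits.size >= 2 && splits.sliding(2).forall(p => p(0) < p(1)),
      "splits must be strictly increasing")
    require(value >= splits.head && value <= splits.last, "value is outside the splits")
    if (value == splits.last) splits.size - 2
    else splits.lastIndexWhere(_ <= value)
  }
}
```

With the illustrative splits `Seq(0.0, 100.0, 200.0, Double.PositiveInfinity)` applied to a column such as Day Minutes, a value of 150.0 falls in the second bucket (index 1).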

Finally, we create a single feature vector using Apache Spark's VectorAssembler transformer. This allows us to perform dimensionality reduction using Apache Spark's PCA transformer.

1. Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a statistical procedure that uses an orthogonal transformation to convert a set of observations of possibly correlated variables into a set of values of linearly uncorrelated variables called principal components.
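
In matrix terms, the procedure can be summarized as follows. The notation is ours: we assume a mean-centred feature matrix $X$ with $n$ rows (customers) and $d$ columns (features), and we keep the first $k$ components.

```latex
C = \frac{1}{n-1} X^{\top} X, \qquad
C = V \Lambda V^{\top}, \qquad
Z = X V_k
```

Here $C$ is the $d \times d$ covariance matrix, the columns of $V$ are its eigenvectors sorted by decreasing eigenvalue (the diagonal of $\Lambda$), $V_k$ contains the first $k$ columns of $V$, and $Z$ is the reduced $n \times k$ representation. The value of $k$ is the number of dimensions retained after reduction.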

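```scala
import org.apache.spark.ml.feature.{PCA, VectorAssembler}

// Assemble the preprocessed columns into a single feature vector
val assembler = new VectorAssembler("Churn_Assembler")
  .setInputCols(assemblerColumns.toArray)
  .setOutputCol("assembled")
stages :+= assembler

// Reduce the assembled vector to reducer_num_dimensions principal components
val reducer = new PCA("Churn_PCA")
  .setInputCol(assembler.getOutputCol)
  .setOutputCol("features")
  .setK(reducer_num_dimensions)
stages :+= reducer
```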

The estimator used is the XGBoostClassifier class from the XGBoost classification package. It is given the parameter values obtained through cross-validation. For an explanation of these parameters and more, see the XGBoost documentation.

```scala
import ml.dmlc.xgboost4j.scala.spark.XGBoostClassifier

// Hyperparameter values (the booster_* variables) come from cross-validation
val xgbParam = Map(
  "eta" -> booster_eta,
  "max_depth" -> booster_max_depth,
  "objective" -> booster_objective,
  "num_round" -> booster_num_round,
  "num_workers" -> booster_num_workers,
  "min_child_weight" -> booster_min_child_weight,
  "gamma" -> booster_gamma,
  "alpha" -> booster_alpha,
  "lambda" -> booster_lambda,
  "subsample" -> booster_subsample,
  "colsample_bytree" -> booster_colsample_bytree,
  "scale_pos_weight" -> booster_scale_pos_weight,
  "base_score" -> booster_base_score
)

// The classifier consumes the PCA output as its feature column
val xgbClassifier = new XGBoostClassifier(xgbParam)
  .setFeaturesCol(reducer.getOutputCol)
  .setLabelCol("label")
```

The classifier is then added to our stages to form the final pipeline:

```scala
import org.apache.spark.ml.Pipeline

stages :+= xgbClassifier
val pipeline = new Pipeline("Churn_Pipeline").setStages(stages)
```

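The model is obtained by fitting this pipeline on the training data. The resulting model is then exported as an MLeap Bundle:

```scala
import ml.combust.bundle.BundleFile
import ml.combust.bundle.serializer.SerializationFormat
import ml.combust.mleap.spark.SparkSupport._
import org.apache.spark.ml.bundle.SparkBundleContext
import resource._

// The bundle context needs a transformed dataset to record the output schema
implicit val sbc: SparkBundleContext = SparkBundleContext()
  .withDataset(model.transform(train))

(for (bundle <- managed(BundleFile("jar:file:/tmp/churn-model-protobuff.zip"))) yield {
  model.writeBundle.format(SerializationFormat.Protobuf).save(bundle).get
}).tried.get
```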

The exported model is then used in our web application to predict churn for 'new' customers online.

```scala
import ml.combust.bundle.BundleFile
import ml.combust.mleap.runtime.MleapSupport._
import resource._

// Load the bundle with the MLeap runtime only (cwd and leapFrame are defined elsewhere)
val dirBundle: Transformer = (for (bundle <- managed(BundleFile("jar:" + cwd + "conf/resources/churn-model-protobuff.zip"))) yield {
  bundle.loadMleapBundle().get.root
}).tried.get

val transformedLeapFrame = dirBundle.transform(leapFrame).get
```

2. Result: Adding customers and predicting their churn risk

We may then need to add the information of the customers to be scored. To do this, you can add them individually or add several at once by uploading a file. As a reminder, since the model is based on machine learning, it must be given data with the same variable names and the same number of variables as those used during training.
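
A simple guard of this kind can be sketched as follows. It is a hypothetical helper written in plain Scala, not part of the application described here: it compares the column names of the incoming data with those used at training time and reports any mismatch.

```scala
object SchemaCheck {
  // Compares the columns of incoming data with those used at training time.
  // Returns Right(()) when they match, or Left with a description of the mismatch.
  def validate(trainingColumns: Seq[String], incoming: Seq[String]): Either[String, Unit] = {
    val missing = trainingColumns.filterNot(c => incoming.contains(c))
    val unexpected = incoming.filterNot(c => trainingColumns.contains(c))
    if (missing.isEmpty && unexpected.isEmpty) Right(())
    else Left("missing: " + missing.mkString(", ") + "; unexpected: " + unexpected.mkString(", "))
  }
}
```

Running such a check before calling the model makes it possible to reject an uploaded file with a clear message instead of failing during prediction.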

The next step after adding customers is the actual prediction using the previously exported model.

The data below are output by the model. They give, for each customer, the probability and confidence of belonging to each of the two classes, in other words the customer's score for being a churner or not.

Assign a score to your customers to predict churn and take tailored actions

Depending on these values and on the chosen threshold, the customer is predicted to be a potential churner or not.

These predictions can optionally be saved in a database for later use.

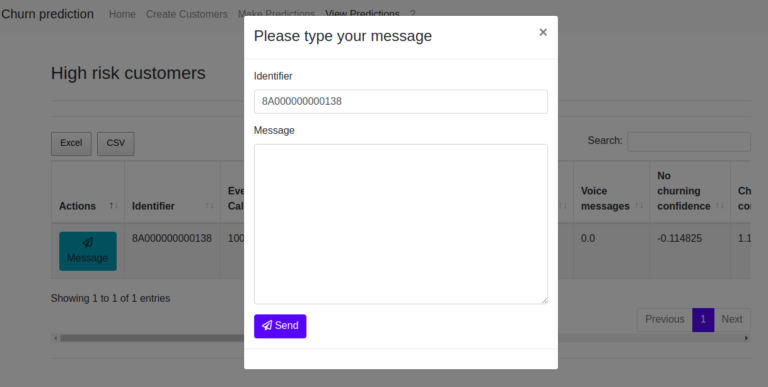

Depending on their churn scores, customers can be classified as 'high risk', 'medium risk' or 'low risk'.
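
Such a segmentation can be expressed as a simple function of the predicted churn probability. The sketch below is illustrative only: the thresholds 0.7 and 0.4 are arbitrary assumptions that would need to be tuned to the retention budget and to the calibration of the model, and the names are hypothetical.

```scala
object RiskSegmentation {
  sealed trait Risk
  case object High extends Risk
  case object Medium extends Risk
  case object Low extends Risk

  // Thresholds are illustrative assumptions, not values from the actual solution.
  def segment(churnProbability: Double, high: Double = 0.7, medium: Double = 0.4): Risk = {
    require(churnProbability >= 0.0 && churnProbability <= 1.0, "probability must be in [0, 1]")
    if (churnProbability >= high) High
    else if (churnProbability >= medium) Medium
    else Low
  }
}
```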

This segmentation can help direct retention marketing efforts more precisely, for example by sending targeted messages to the customers concerned.

In short, churn is omnipresent in telecommunications, particularly because of the strong competition in the sector. Approaching it differently, by prioritizing retention, could prove very beneficial. Through prediction, machine learning techniques coupled with Big Data can help direct retention-oriented marketing strategies towards the right customers.

On another dimension, a customer is influenced by their network (the set of other customers with whom they often communicate): if certain members of that network churn, it is very likely that the customer will follow.

Considering not only a customer's interactions with services but also their interactions with their network of other customers can help increase the performance and accuracy of a predictive attrition model.

Anticipating churn using Big Data provides marketing with a compass to better understand customer behavior, take targeted action, and strengthen loyalty. This approach is part of a broader dynamic of data valuation, where every piece of information becomes an opportunity for action.

Baamtu supports companies in implementing tailor-made solutions, from needs analysis to the deployment of operational models.

See also our article on proactive customer relationship management using chatbots.