Credit risk scoring is becoming a major lever of innovation for financial institutions. With the rise of new technologies and the growing availability of data, the field is undergoing a significant transformation.

Moreover, Big Data, fueled by the rapid growth of multiple data sources (structured or not), is becoming the main driver of innovation in the banking sector.

Thus, investments in big data analysis in the banking sector totaled $20.8 billion in 2016. This makes the sector one of the main consumers of Big Data services and an increasingly hungry market for Big Data architects, solutions and tailor-made tools.

Machine learning makes it possible to exploit these large volumes of data in banking applications such as credit risk.

I. Predictive analysis to better assess customer risk (scoring for credit risk)

Applying machine learning techniques to individual customer data, combined with more traditional data, now makes it possible to identify the strategies banks use to manage credit risk and to report on the different perspectives they may have on the types of risk that threaten them.

Artificial intelligence is applied to better assess risk at the individual customer level. These assessments can then be used to estimate more complex risks, such as the credit risk of an entire economy (scoring for credit risk).

The big data trend

According to IDC, the total volume of digital data in the world will reach 175 zettabytes (ZB) in 2025, compared to around 33 ZB in 2018, a more than fivefold increase in seven years.

Banking data will be a cornerstone of this data deluge, and whoever can process it will have a significant advantage over the competition.

Let’s take a look at a concrete example of the use of Machine Learning in the banking sector, more specifically for credit risk modeling (Scoring for credit risk).

The dataset

The German Credit dataset (banking data from Germany) contains 1,000 rows and 20 explanatory variables. Each entry represents a person who received credit and is classified as a good or bad payer via the variable “creditability”. Our goal in this project is to build a score that models this target variable from the explanatory variables.

Frequency of bank default

About 30% of the customers in our database have defaulted, which is quite high.

Score Modeling

Credit scoring is the process of accepting or rejecting a credit application based on a customer's score. Anyone who has ever applied for a credit card or borrowed money to purchase a car, a home, or to take out another personal loan has a credit report. Lenders use scores to determine who qualifies for a loan, at what interest rate, and with what credit limit. The higher the score, the more confident a lender can be in the customer's creditworthiness. However, the score itself is not part of a regular credit report: it is produced by a mathematical formula that translates credit report data into a three-digit number that lenders use to make credit decisions.

The aim here is to use credit assessment techniques to evaluate the risk associated with lending to a particular customer and to develop a scoring model. Credit scoring applies a statistical model to assign a risk rating to a credit application; it is a form of artificial intelligence, based on predictive modeling, that assesses the likelihood that a customer will default. Over the years, several modeling techniques for credit scoring have emerged. Despite this diversity, the scorecard model stands out and is used by nearly 90% of companies. New machine learning methods also make it possible to build hybrid probabilistic models for estimating customer risk.

II. Implementation (scoring for credit risk)

Before building the credit risk scoring model, two steps are necessary. The first is to calculate the Weight of Evidence (WoE); the second is to calculate the Information Value (IV), which depends on the WoE values. The WoE values are also used to check the results. After binning the continuous and discrete variables into categories, we can compute the WoE of each category; the categorical variables are then replaced by their WoE values, which can later be used to build the regression model.

Weight of Evidence (WoE)

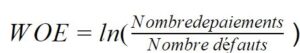

The formula of WoE is as follows:
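One common way to write it, taking the bad-over-good ratio as an assumption (some tools use the inverse ratio, which only flips the sign), is, for bin $i$ of a variable:

$$
\mathrm{WoE}_i = \ln\left(\frac{B_i / B}{G_i / G}\right)
$$

where $B_i$ and $G_i$ are the numbers of bad and good customers in bin $i$, and $B$ and $G$ are the totals over all bins of that variable.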

Computing the WoE for each category of a variable reveals its overall trend and lets us check that there are no anomalies in the data. A logical relationship between WoE and default ensures that the score weights are fully interpretable, because the points then reflect the logic of the model.
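As an illustration, the WoE of each bin can be computed by hand in a few lines of base R. This is a minimal sketch on made-up data: the `loans` data frame and the `woe_table()` helper are hypothetical, and the bad-over-good sign convention is an assumption.

```r
# Toy data (hypothetical): loan duration bins and a 0/1 default flag (1 = bad payer).
loans <- data.frame(
  duration_bin = c(rep("short", 50), rep("medium", 30), rep("long", 20)),
  bad          = c(rep(c(1, 0), c(5, 45)),
                   rep(c(1, 0), c(9, 21)),
                   rep(c(1, 0), c(16, 4)))
)

# woe_table(): hypothetical helper, not part of any package.
# Assumed convention: WoE = ln(share of bads in bin / share of goods in bin).
woe_table <- function(bin, bad) {
  n_bad     <- tapply(bad, bin, sum)       # number of bad payers per bin
  n_good    <- tapply(1 - bad, bin, sum)   # number of good payers per bin
  dist_bad  <- n_bad / sum(n_bad)          # share of all bads falling in each bin
  dist_good <- n_good / sum(n_good)        # share of all goods falling in each bin
  data.frame(
    bin    = names(n_bad),
    n_bad  = as.vector(n_bad),
    n_good = as.vector(n_good),
    woe    = as.vector(log(dist_bad / dist_good))
  )
}

woe_duration <- woe_table(loans$duration_bin, loans$bad)
print(woe_duration)
```

With these toy counts the WoE rises from the short bin to the long bin, meaning that longer durations concentrate a larger share of the defaults. A bin with no good or no bad customers would give an infinite WoE, so real binning procedures usually merge or smooth such bins.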

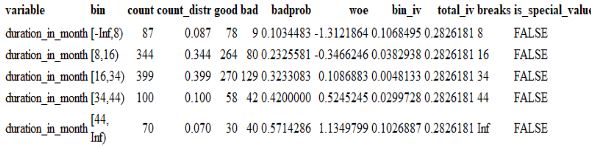

WOE of duration_in_month variable

Variable selection via Information Value (IV)

The Information Value (IV) comes from information theory and is measured using the following formula. It is used to assess the overall predictive power of a variable.
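A standard way to write the IV of a variable, with the WoE taken as the log of the bad share over the good share (an assumed convention), sums over its bins $i$:

$$
\mathrm{IV} = \sum_{i} \left(\frac{B_i}{B} - \frac{G_i}{G}\right) \ln\left(\frac{B_i / B}{G_i / G}\right)
$$

where $B_i$ and $G_i$ are the numbers of bad and good customers in bin $i$, and $B$ and $G$ are the totals. Each term is non-negative, so the IV grows as the good and bad distributions move apart, whichever sign convention is used for the WoE.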

| Information Value (IV) | Predictive capacity |
| --- | --- |
| < 0.02 | useless for prediction |
| 0.02 – 0.1 | weak predictor |
| 0.1 – 0.3 | medium predictor |
| 0.3 – 0.5 | good predictor |
| > 0.5 | excellent, too good to be true (to be verified) |

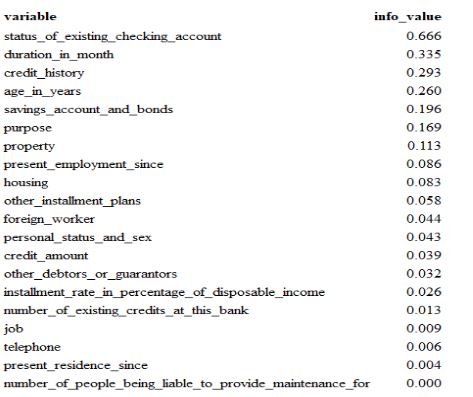

IV results on our data:

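```r
library(scorecard)  # iv()
library(dplyr)      # %>%, as_tibble(), mutate(), arrange(), desc()

# Information Value of every variable against the target, sorted from high to low
iv = iv(data, y = 'creditability') %>%
  as_tibble() %>%
  mutate( info_value = round(info_value, 3) ) %>%
  arrange( desc(info_value) )

# Render the result as a table
iv %>%
  knitr::kable()
```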

We reduce the variables that enter our feature selection process by filtering out all variables with IV < 0.02.
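The filtering step can be sketched without any package. The example below is only an illustration on made-up data: `info_value()` is a hypothetical helper, the `toy` variables are invented, and every bin is assumed to contain both good and bad customers.

```r
# info_value(): hypothetical helper computing the IV of one binned variable.
# bad is a 0/1 flag (1 = bad payer); every bin is assumed to hold both classes.
info_value <- function(bin, bad) {
  dist_bad  <- tapply(bad, bin, sum) / sum(bad)
  dist_good <- tapply(1 - bad, bin, sum) / sum(1 - bad)
  sum((dist_bad - dist_good) * log(dist_bad / dist_good))
}

# Toy data (hypothetical): 30 bad payers followed by 70 good payers,
# with one informative variable and one uninformative variable.
bad <- rep(c(1, 0), times = c(30, 70))
toy <- data.frame(
  duration_bin = c(rep(c("short", "medium", "long"), c(5, 9, 16)),    # bads
                   rep(c("short", "medium", "long"), c(45, 21, 4))),  # goods
  noise_bin    = c(rep(c("a", "b"), c(15, 15)),                       # bads
                   rep(c("a", "b"), c(35, 35)))                       # goods
)

iv_values <- sapply(toy, info_value, bad = bad)
selected  <- names(iv_values)[iv_values >= 0.02]  # drop variables with IV < 0.02

print(round(iv_values, 3))
print(selected)
```

Here `noise_bin` has the same good and bad distribution in every bin, so its IV is zero and it is filtered out, while `duration_bin` is kept. Its toy IV is above 0.5, which on real data would call for the verification suggested in the table above.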

Logistic Regression on Transformed Variables

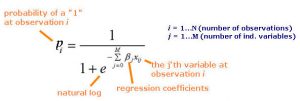

We now apply logistic regression to our transformed data.
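As a minimal sketch of this step on made-up data, a single binned variable is replaced by its WoE and passed to base R's `glm()`. The toy counts, the variable names and the bad-over-good WoE convention are assumptions, not the article's actual model.

```r
# Toy data (hypothetical): three duration bins with different default rates.
bin <- c(rep("short", 50), rep("medium", 30), rep("long", 20))
bad <- c(rep(c(1, 0), c(5, 45)), rep(c(1, 0), c(9, 21)), rep(c(1, 0), c(16, 4)))

# WoE per bin, assuming the bad-over-good convention.
dist_bad  <- tapply(bad, bin, sum) / sum(bad)
dist_good <- tapply(1 - bad, bin, sum) / sum(1 - bad)
woe       <- log(dist_bad / dist_good)

# Replace each category by its WoE value, then fit the logistic regression.
train_woe <- data.frame(bad = bad, duration_woe = as.vector(woe[bin]))
m_toy     <- glm(bad ~ duration_woe, family = binomial(), data = train_woe)

print(coef(m_toy))
```

In this single-variable case the fitted slope is 1 and the intercept equals the log of the overall bad-to-good ratio, which is a convenient sanity check on the WoE transformation. With several correlated variables the coefficients generally move away from 1.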

Estimated parameters of Logistic regression

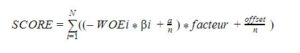

Calculation of points from logit & Odds (scoring for credit risk)

We recall that the logit can be represented by:

$$\operatorname{logit}(p) = \ln\left(\frac{p}{1-p}\right)$$

The logit is therefore the logarithm of the ratio of the probability of defaulting to the probability of not defaulting.

Logistic regression models are linear models, in that the logit of the predicted probability is a linear function of the predictor variables. A scoring model derived this way has the desirable property that credit risk, on the log-odds scale, is a linear function of the predictors and, after some additional transformations of the model parameters, a simple linear function of the WoE values associated with each class. The score is therefore a simple sum of the point values of each variable, which can be read from the resulting point table.

The total score of an applicant is then a linear function of the logarithm of the good_customer / bad_customer odds.

We choose to scale the points so that a total score of 600 points corresponds to good_customer / bad_customer odds of 20 to 1, and so that an increase of 50 points in the score corresponds to a doubling of these odds.

- Scaling: the choice of the reference point (600) does not affect the modeling.

- odds0 = 20: a customer with a score of 600 has bad-to-good odds of 1/20.

- pdo = 50 (points to double the odds): an increase of 50 points doubles the good-to-bad odds, i.e. halves p/(1-p).

- Since 600 = ln(20) * Factor + Offset and 650 = ln(40) * Factor + Offset, subtracting the two gives Factor = pdo / ln(2).

- Offset = Score - [Factor * ln(Odds)]
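The scaling can be checked numerically. The sketch below assumes a reference score of 600 at good-to-bad odds of 20 to 1 and 50 points to double the odds; `scale_factor`, `scale_offset` and `score_from_odds()` are hypothetical names introduced for illustration.

```r
points0 <- 600  # reference score
odds0   <- 20   # good-to-bad odds at the reference score
pdo     <- 50   # points to double the odds

scale_factor <- pdo / log(2)                        # log() is the natural logarithm
scale_offset <- points0 - scale_factor * log(odds0)

# score_from_odds(): hypothetical helper mapping good-to-bad odds to a score.
score_from_odds <- function(odds) scale_offset + scale_factor * log(odds)

print(round(c(factor = scale_factor, offset = scale_offset), 2))
print(score_from_odds(c(10, 20, 40)))
```

With these values the factor is about 72.13 and the offset about 383.90, so good-to-bad odds of 10, 20 and 40 to 1 map to 550, 600 and 650 points.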

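```r
library(scorecard)

points0 = 600
odds0 = 20
pdo = 50

# bins and m are assumed to be the WoE binning result and the fitted logistic regression model
card = scorecard( bins , m
                , points0 = points0
                , odds0 = 1/odds0 # scorecard wants the inverse
                , pdo = pdo
                )

# Apply the scorecard to the data to obtain each customer's score
sc = scorecard_ply( data, card )
```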
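What such a scorecard encodes can be sketched by hand. The snippet below is an illustration under stated assumptions, not the internals of the scorecard package: the coefficients, WoE values and variable names are made up, the WoE follows the bad-over-good convention, and the model predicts the log-odds of default. Under those assumptions the score is a base amount plus one points term per variable.

```r
pdo <- 50
points0 <- 600
odds0_good_bad <- 20
scale_factor <- pdo / log(2)
scale_offset <- points0 - scale_factor * log(odds0_good_bad)

# Hypothetical fitted model: log-odds of default = intercept + sum(beta * WoE).
intercept <- -0.85
beta      <- c(duration = 0.9, purpose = 0.7)

# Hypothetical WoE values of the bins one applicant falls into.
applicant_woe <- c(duration = -1.35, purpose = 0.40)

# Good-to-bad log-odds are minus the log-odds of default, hence the minus signs.
base_points <- scale_offset - scale_factor * intercept
var_points  <- -scale_factor * beta * applicant_woe
score       <- base_points + sum(var_points)

# Same score computed directly from the predicted log-odds of default.
log_odds_bad <- intercept + sum(beta * applicant_woe)
score_direct <- scale_offset - scale_factor * log_odds_bad

print(round(var_points, 1))
print(round(c(score = score, score_direct = score_direct), 1))
```

The two computations agree, which is why a scorecard can be published as a table of points per bin: a bin with a negative WoE (fewer defaults than average) earns points, and a bin with a positive WoE loses them.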

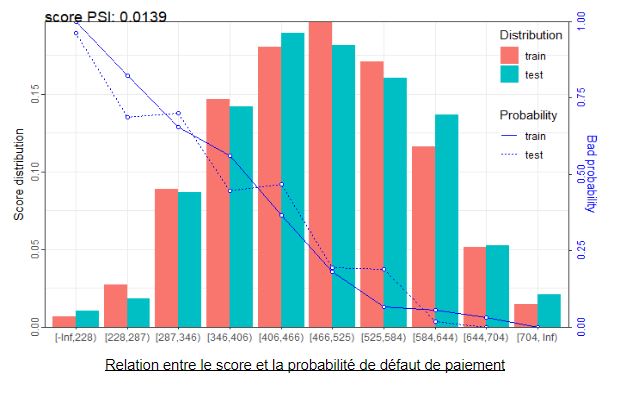

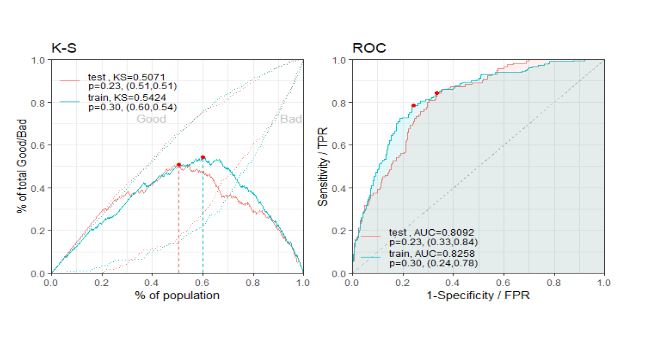

III. Kolmogorov-Smirnov test for validation (credit risk scoring)

In general, the distribution of scores for good customers differs statistically significantly from the distribution of “bad” customers if the KS statistic is larger than the critical threshold value. Here we reject the null hypothesis (that good and “bad” customers have the same score distribution) based on the results of the tests on the train and test data.
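The test itself is available in base R as `ks.test()`. The sketch below runs it on simulated scores, which are hypothetical and not the article's results, and recomputes the statistic by hand as the largest gap between the two empirical distribution functions.

```r
set.seed(42)  # reproducible toy scores (hypothetical, not the article's results)
score_good <- rnorm(700, mean = 620, sd = 50)
score_bad  <- rnorm(300, mean = 560, sd = 50)

# KS statistic: largest gap between the two empirical CDFs.
grid      <- sort(c(score_good, score_bad))
ks_manual <- max(abs(ecdf(score_good)(grid) - ecdf(score_bad)(grid)))

# Two-sample KS test from base R's stats package.
ks <- ks.test(score_good, score_bad)

print(c(manual = ks_manual, ks_test = unname(ks$statistic)))
print(ks$p.value)
```

A small p-value leads to rejecting the hypothesis that good and bad customers share the same score distribution. In practice the same check would be run separately on the train and test scores.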

Generally, the threshold for accepting a loan varies from one loan type to another and from one lender to another. Some loans require a minimum score of 520, while others can accept scores lower than 520. Therefore, after obtaining the cutoff score, we can then decide whether or not to approve the loan. Overall, predictive models are based on using a customer’s historical data to predict the likelihood that that customer will engage in a defined behavior in the future. They not only identify “good” and “bad” applications on an individual basis, but they also predict the likelihood that an application with a given score will be “good” or “bad.” These probabilities or scores, along with other business considerations, such as predicted approval rates, profit, churn rate, and loss, then serve as the basis for decision-making.

That’s all about this Credit Risk Scoring project.

IV. Let’s put scoring to work for your decisions

👉 Contact our experts by clicking here and find out how Baamtu can help you improve your credit risk assessment process using data and artificial intelligence.
